Optimizing costs in GitHub Actions

At EasyBroker, we recently decided to migrate our continuous integration system to GitHub Actions.

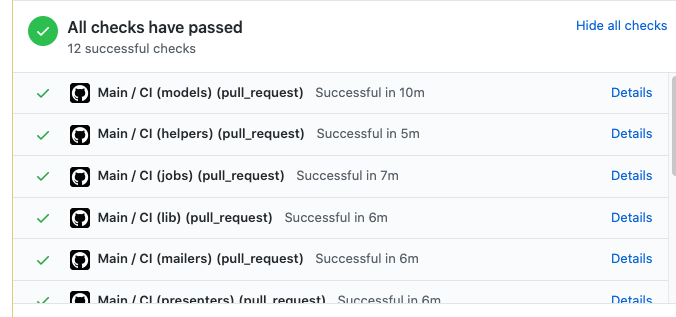

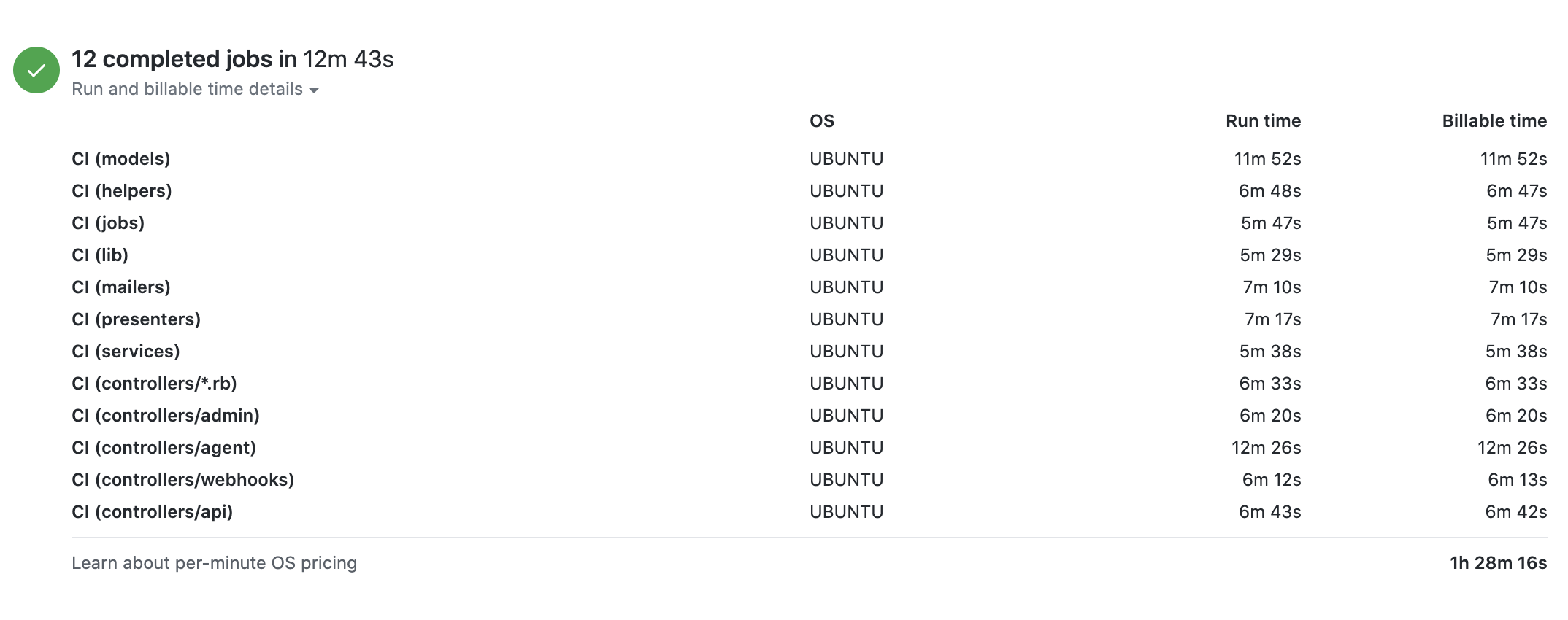

Our first setup was a workflow with 12 containers, which we thought would be a good way to quickly identify errors in our tests.

Most containers took about 6 minutes each; only 2 specific containers (models and a group of controllers) took about 12 minutes.

After a week of working with this new setup, we received a message from GitHub warning us that we were close to using up all the minutes in the free tier 💸💸💸, so we decided to investigate.

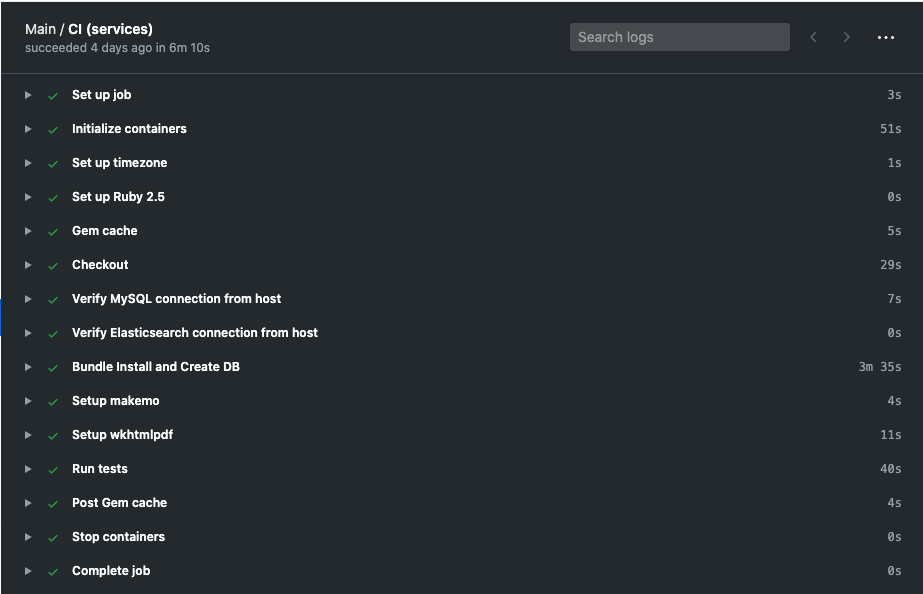

First of all, we noticed that in the 10 containers that took 6 minutes to complete, 4 of those minutes were spent on setup.

Then we found that, even though we had the cache "configured", our containers were downloading the gems over and over again.

And finally, the tests themselves took only between 20 and 50 seconds of the process.

Saving some minutes

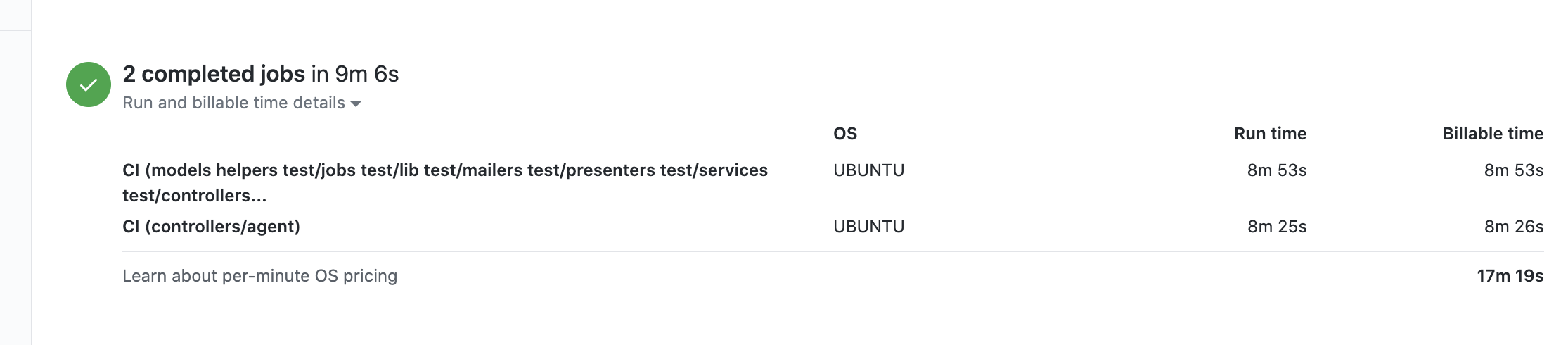

The first change we made was going from 12 containers to 2. Since the agent controller tests take most of the time, we decided to merge the models container with the other 10 containers that took between 20 and 50 seconds to complete their tests, ending up with a configuration like the following.

Before

    strategy:
      fail-fast: false
      matrix:
        test_folder: [models, helpers, jobs, lib, mailers, presenters, services, controllers/*.rb, controllers/admin, controllers/agent, controllers/webhooks, controllers/api]

Now

    strategy:
      fail-fast: false
      matrix:
        test_folder: [models helpers test/jobs test/lib test/mailers test/presenters test/services test/controllers/*.rb test/controllers/admin test/controllers/webhooks test/controllers/api, controllers/agent]
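The post does not show the step that consumes `matrix.test_folder`. As a minimal sketch, assuming a job named `test` (hypothetical) and that each matrix entry is passed straight to `bin/rails test`, the two-container layout could be wired up roughly like this. The full `test/...` paths and the shortened list of folders are assumptions for illustration, not the original configuration.

```yaml
jobs:
  test:                       # hypothetical job name
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false        # let the other container finish even if one fails
      matrix:
        test_folder:
          # Container 1: models plus the fast suites, run in a single invocation
          - test/models test/helpers test/jobs test/lib test/mailers
          # Container 2: the slow agent controller tests on their own
          - test/controllers/agent
    steps:
      - uses: actions/checkout@v2
      # Ruby, gem, and database setup steps omitted for brevity
      - name: Run tests
        run: bin/rails test ${{ matrix.test_folder }}
```

Because setup takes minutes while most suites take seconds, grouping the fast suites means that setup cost is paid twice per execution instead of twelve times.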

The next change was updating the cache setup so that it actually worked. Our configuration was using version 1 of actions/cache and included the restore-keys option, which the documentation describes as optional.

    - name: Gem cache
      uses: actions/cache@v1
      with:
        path: vendor/bundle
        key: ${{ runner.os }}-gems-${{ hashFiles('**/Gemfile.lock') }}
        restore-keys: |
          ${{ runner.os }}-gems-

So I updated to version 2 of the action and removed the restore-keys input, since it is not necessary at this point.

    - name: Gem cache
      uses: actions/cache@v2
      with:
        path: vendor/bundle
        key: ${{ runner.os }}-gem-use-ruby-${{ hashFiles('**/Gemfile.lock') }}
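Since the original problem was a cache that looked configured but was not being used, it can help to make the cache result visible in the logs. The following is a sketch rather than part of the original workflow: it gives the cache step an `id` (the name `gem-cache` is an assumption) and prints the `cache-hit` output that actions/cache exposes.

```yaml
- name: Gem cache
  id: gem-cache               # hypothetical id, needed to read the step's outputs
  uses: actions/cache@v2
  with:
    path: vendor/bundle
    key: ${{ runner.os }}-gem-use-ruby-${{ hashFiles('**/Gemfile.lock') }}

- name: Report gem cache status
  # Prints "true" when an exact match for the key was restored
  run: echo "Gem cache hit: ${{ steps.gem-cache.outputs.cache-hit }}"
```

If this never reports a hit on repeated runs with an unchanged Gemfile.lock, the cached path and the path Bundler installs into are probably not the same directory.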

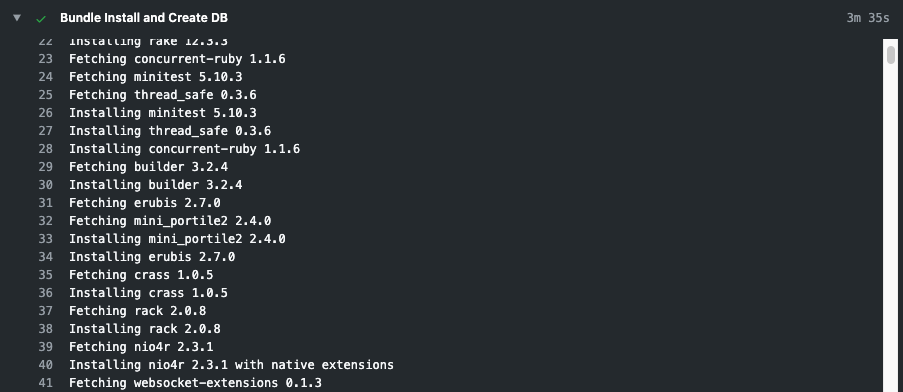

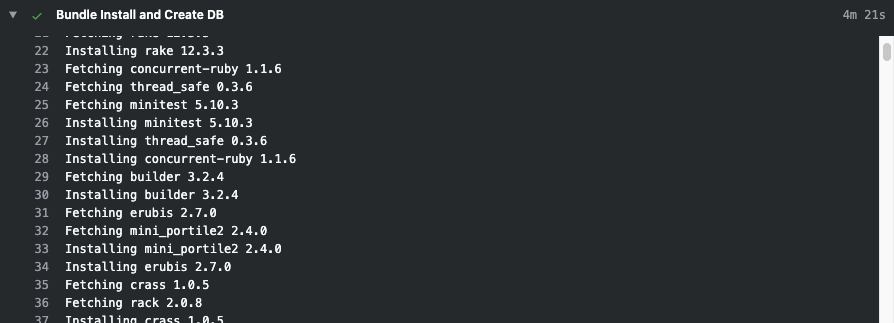

In addition, while reviewing the step where the dependencies are installed, I found that it was merged with the step that creates the database, and that it showed a deprecation warning when running bundle install with the flag that sets the installation path for the gems.

    - name: Bundle Install and Create DB
      env:
        RAILS_ENV: test
        DB_PASSWORD: root
        DB_PORT: ${{ job.services.mysql.ports[3306] }}
      run: |
        sudo /etc/init.d/mysql start
        cp config/database.ci.yml config/database.yml
        gem install bundler --version 2.0.2 --no-ri --no-rdoc
        bundle install --jobs 4 --retry 3 --path vendor/bundle
        bin/rails db:setup

[DEPRECATED] The `--path` flag is deprecated because it relies on being remembered across bundler invocations, which bundler will no longer do in future versions. Instead please use `bundle config set path 'vendor/bundle'`, and stop using this flag

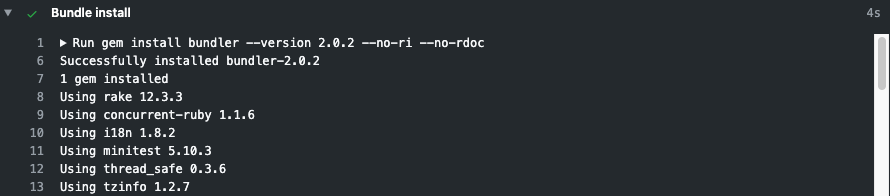

So I split it into 2 steps and updated the way Bundler is told where to install the gems.

    - name: Bundle install
      run: |
        gem install bundler --version 2.0.2 --no-ri --no-rdoc
        bundle config path vendor/bundle
        bundle install --jobs 4 --retry 3

    - name: Create DB
      env:
        RAILS_ENV: test
        DB_PASSWORD: root
        DB_PORT: ${{ job.services.mysql.ports[3306] }}
      run: |
        sudo /etc/init.d/mysql start
        cp config/database.ci.yml config/database.yml
        bin/rails db:setup
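The deprecation message itself suggests the `bundle config set path` form. As a sketch, assuming a Bundler version that supports that subcommand (which may not be the 2.0.2 pinned above), the install step could look like this:

```yaml
- name: Bundle install
  run: |
    # Assumes a Bundler version that supports the `bundle config set` form
    # named in the deprecation message
    bundle config set path 'vendor/bundle'
    bundle install --jobs 4 --retry 3
```

Either way, the point is that the install path is stored in Bundler's configuration instead of being passed as a flag, so it matches the `vendor/bundle` directory that the cache step saves and restores.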

With these small tweaks, installing the gems went from 4 minutes to just 4 seconds.

Before

Now

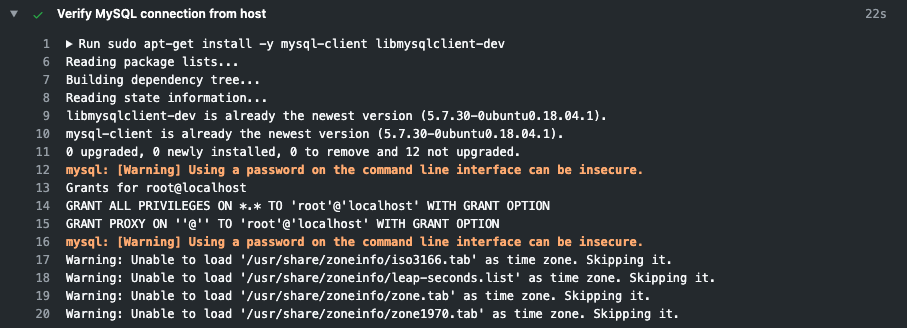

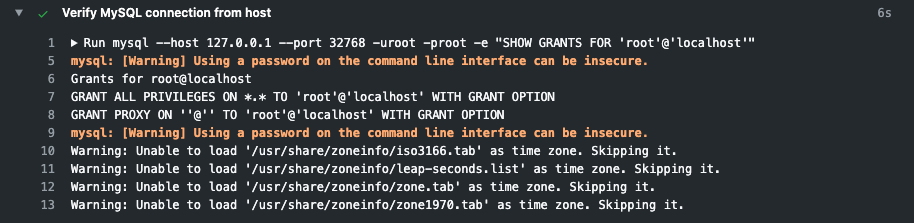

As a final step for this first stage of optimization, I looked at the steps where we installed some dependencies using apt-get and found that some calls were unnecessary. For example, when we verified the MySQL connection, we were trying to install the client, which is already installed in the container, and this took about 20 seconds (between verifying the installation and performing the connection test). By removing this unnecessary apt-get call, we brought the time down to 6 seconds.
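The post does not include the connection check itself. A minimal sketch of a check that relies on the preinstalled client could look like the following; the host `127.0.0.1`, the `root` user, and the step name are assumptions, while the password and port mirror the values used in the Create DB step.

```yaml
- name: Verify MySQL connection
  env:
    DB_PASSWORD: root
    DB_PORT: ${{ job.services.mysql.ports[3306] }}
  run: |
    # Confirm the client is already on the runner instead of installing it with apt-get
    command -v mysql
    # Assumed host and user; adjust to match the service definition
    mysql --host=127.0.0.1 --port="$DB_PORT" --user=root --password="$DB_PASSWORD" --execute="SELECT 1"
```

If the client were ever missing from the runner image, `command -v mysql` would fail the step immediately, which is a clearer signal than paying for an apt-get call on every run.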

Before

Now

Takeaways

This was the first stage of our effort to get a healthy and efficient CI system. After applying these changes, we reduced the total time from about 1 hour and 30 minutes per execution to only about 20 minutes.

The lesson here is that, in services where billing depends on execution time, saving seconds is crucial, and it is important to pay attention to the small details, like the apt-get scenario where the dependencies were already installed in the container. Maybe 13 seconds doesn't sound like much, but if you consider the number of builds your company runs every day, you can probably save one or two hours per day, and that will be reflected in your bill.

The next step is to try moving the dependencies that have to be installed manually into a Docker container (I still need to investigate whether this is possible) and to use a tool like TestProf to identify the slowest tests and optimize them.
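As a starting point for that investigation, GitHub Actions lets a job run its steps inside a Docker image through the `container` key. The sketch below is untested against this project: the job name and the image `example/ci-ruby-deps:latest` are hypothetical, standing in for an image with the apt dependencies already baked in.

```yaml
jobs:
  test:                                    # hypothetical job name
    runs-on: ubuntu-latest
    container:
      image: example/ci-ruby-deps:latest   # hypothetical prebuilt image
    steps:
      - uses: actions/checkout@v2
      # Gem cache, bundle install, and database steps would go here
      - name: Run tests
        run: bin/rails test
```

One thing to check when trying this is how the job reaches the MySQL service, since networking for service containers differs when the job itself runs in a container.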